Topic modeling

Topic-modeling

Topic-modeling

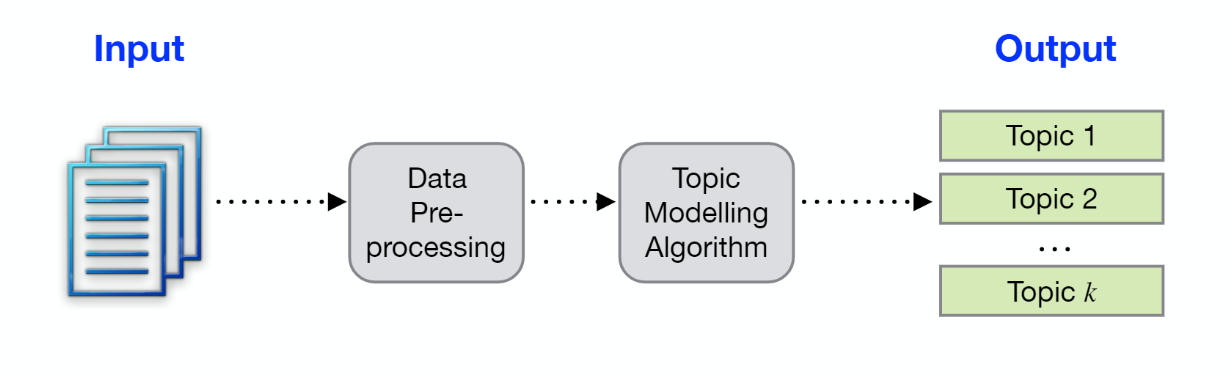

Summary: Identifying the different topics/ subjects in the document by accounting the frequency of occurrence of those topics and thereby ultimately classifying documents into their topics.

Topic modeling is a statistical model to discover semantic structures in the text body. The “topics” produced by topic modeling techniques are clusters of similar words. A document typically concerns multiple topics in different proportions; thus, in a document that is 10% about cats and 90% about dogs, there would probably be about 9 times more dog words than cat words. In this example, I have created a Topic modeling for 2 Wikipedia web pages using Latent Dirichlet allocation (LDA).

Latent Dirichlet allocation (LDA) is a generative statistical model that allows sets of observations to be explained by unobserved groups that explain why some parts of the data are similar. For example, if observations are words collected into documents, it posits that each document is a mixture of a small number of topics and that each word’s presence is attributable to one of the document’s topics.

Natural Language Toolkit (NLTK) provides text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries for Python.

I. Scraping web-pages for their content:

II. Storing the content in text files:

file = open('ParsedString3.txt','wb' )

for strings2 in soup1.stripped_strings:

strings2 = str(strings2)

file.write(strings2)

III. Creating a single document from a combination of the 2 text documents obtained from scraping webpages:

file1 = open("../ParsedString3.txt", 'r') file2 = open("../ParsedString4.txt", 'r')doc1 = file1.readlines() doc2 = file2.readlines()# compile sample documents into a listdoc_set = doc1 + doc2

IV. Importing libraries:

from nltk.tokenize import RegexpTokenizer

from stop_words import get_stop_words

from nltk.stem.porter import PorterStemmer

from gensim import corpora, models

import gensim

V. Cleaning and tokenizing:

raw = i.lower()

raw = raw.decode("utf8")

tokens = tokenizer.tokenize(raw)

where “i” is the element in the document list

VI. Removing stop words from token:

Stop words are those words which appear in higher frequency is the entire document and doesn’t prove to be of much importance is identifying the topics in document. These words are well defined in the library “stop_words”. Some examples are: and, the, or, is, ., ,, ?.

VII. Stemming tokens ?:

Stemming helps to reduce inflectional forms and sometimes derivationally related forms of a word to a common base form, thereby shortening the lookup, and normalizing sentences. Many variations of words carry the same meaning.

VIII. Bag-of-Words (BoW):

First, create a dictionary function, which assigns a unique id to each token in document text and collects relevant statistics( count). Create a bag-of-words from this dictionary which results in a text matrix of occurrence of the word. The output includes those words which are present in the list.

IX. LDA model:

Generate an LDA model with required specifications — corpus, the number of topics model needs to be designed for, input dictionary and input corpus, number of words in each pass

X. And the Topics are…

Call the LDA model to identify either 1, 2, 3 or more topics from the given list of words.

[(2, u’0.000 “u” + 0.000"oil”’), (1, u’0.232 “u” + 0.036 “lego”’)]Each generated topic is separated by a comma. Within each topic are the 2 most probable words to appear in that topic.

Originally published at https://adhira-deogade.github.io.

Comments

Post a Comment